The world of artificial intelligence (AI) has experienced exponential growth and has an insatiable hunger to consume data. From self-driving cars to chatbots that mimic human conversation, AI is revolutionizing industries at a blistering pace. AI’s power is derived from its data backbone. Access to data, the speed of its processing, and scalable storage performance are all pivotal factors that determine the efficiency of an AI pipeline. This is where Qumulo has proved itself to be the best data storage solution on the planet for AI workloads, as the industry’s highest performance and most cost-effective file data solution in the cloud.

Why Qumulo is Ideal for File-based AI Workloads

AI applications, be it deep learning models or neural networks, require a unique set of storage characteristics, all of which are satisfied by Qumulo:

- Scalability: AI datasets are dynamic. They grow over time as more data is collected and processed. Qumulo’s ability to scale with predictable high performance ensures that as AI workloads grow, Qumulo can meet their demands at any scale.

- Cost-effectiveness: Funding AI initiatives can be a significant investment. Saving on storage costs without compromising on performance can free up resources for other critical areas, be it research, development, or production deployments.

- Ability to Scale Anywhere™: Infrastructure owners and data scientists benefit from the flexibility of training in one location and deploying in another, on highly secure infrastructure. Qumulo’s software-defined storage system can be deployed and run anywhere, which makes it easy to train an AI model in the core data center and push it to production anywhere.

- Performance: AI models, especially those used in scenarios like autonomous vehicles or financial transactions, need real-time data access before and after model training. Qumulo’s high-speed data retrieval ensures that data is available the moment it’s required.

Let’s drill into the fourth bullet, performance, and underscore the importance of seamless and lightning-fast retrieval of data and metadata. This is vital for AI applications that require scalable file storage – on premises or in the cloud.

Through testing synthetic AI workloads, we found that we are indeed the fastest file-based cloud solution on the market for AI, where data scientists can use Qumulo for data collection, pre-training, production training, and ongoing inference — no matter the scale.

Read on.

Widely Applicable AI Benchmark

To put Qumulo’s performance capabilities into perspective, let’s dive into the latest result achieved with Qumulo running in the cloud on AWS infrastructure. We used SPECstorage to characterize AI performance on Qumulo. This benchmark (aptly named AI_Image) leverages file sizes and I/O patterns that synthetically and accurately exercise common AI workloads:

- Based on TensorFlow best practices – world’s most widely deployed AI/ML framework

- Traced from 3 different models: ResNet, VGG (Visual Geometry Group), and SSD (Single Shot Detector)

- Using open source datasets from Cityscapes, ImageNet, and COCO

Due to TensorFlow’s ubiquity in the AI space, the benchmark applies to a wide range of AI model workloads that produce AI results for:

- Image Classification and Object Detection

- Natural Language Processing (NLP)

- Speech Recognition

- Recommendation Systems

- Generative Models

- Healthcare and Life Sciences

…and MANY more

Benchmark Description and Results Achieved

The goal of the benchmark is to serve data quickly from Qumulo storage to the application layer (using GPUs) running the AI jobs. The benchmark measures storage performance and latency under a realistic set of I/O patterns from a batch of clients. The clients incrementally increase their number of AI jobs until they reach the target, which in this test is a total of 480 jobs. The benchmark runs four independent, concurrent sub-workloads:

- AI_SF – Reads of small image files

- AI_TF – Writes out larger files (ideally 100 MB+ files)

- AI_TR – Reads in large TFRecords

- AI_CP – Performs occasional checkpointing
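To make the ramp-up concrete, here is a minimal Python sketch of how a 480-job target could be reached in equal steps and spread across the 16 client instances listed in the appendix. The number of load points (10) and the helper names `ramp_schedule` and `jobs_per_client` are assumptions for illustration only; the actual load-point settings used in the test are not stated in this post.

```python
def ramp_schedule(target_jobs: int, load_points: int) -> list[int]:
    """Cumulative job count at each load point, ending exactly at target_jobs."""
    if load_points <= 0 or target_jobs < load_points:
        raise ValueError("need at least one job per load point")
    # Integer arithmetic keeps the final step equal to target_jobs.
    return [target_jobs * i // load_points for i in range(1, load_points + 1)]


def jobs_per_client(total_jobs: int, clients: int) -> list[int]:
    """Spread total_jobs across clients as evenly as possible."""
    base, extra = divmod(total_jobs, clients)
    return [base + 1 if i < extra else base for i in range(clients)]


# 480 jobs is the target from this test; 10 load points is an assumed value.
schedule = ramp_schedule(target_jobs=480, load_points=10)
print("jobs at each load point:", schedule)

# 16 clients comes from the bill of materials in the appendix.
print("jobs per client at full load:", jobs_per_client(schedule[-1], clients=16))
```

With these assumed settings each step adds 48 jobs, and at full load every client runs 30 jobs.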

Results

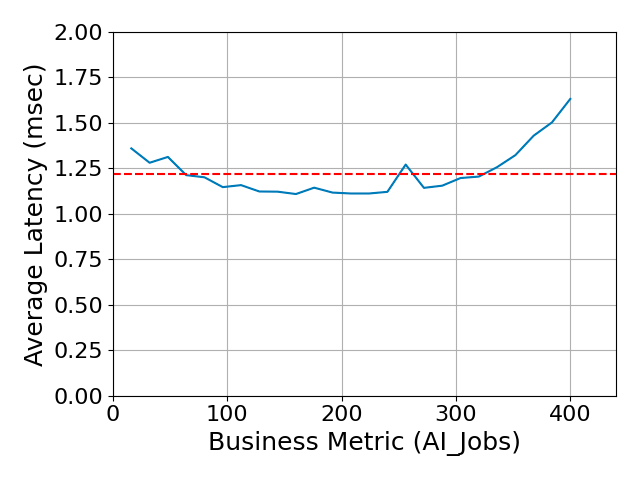

Fig. 1 displays the following results:

- The X axis shows the number of jobs running the AI benchmark over time

- The Y axis shows the overall latency over the length of the test

- Latency stays low and predictable as the number of AI jobs increases

Fig. 1

** Comparison based on the best-performing systems in public clouds published at www.spec.org as of October 2023. SPEC® and the benchmark name SPECstorage® are registered trademarks of the Standard Performance Evaluation Corporation. For more information about SPECstorage 2020, see https://www.spec.org/storage2020/.

On Premises Applicability

While the SPECstorage benchmark tests used a cloud-based environment, the results can be extrapolated to estimate performance on similar on-premises hardware. When Qumulo publishes this benchmark on SPEC’s website (expected December 2023), the details and cost of the environment will be available there, including the EC2 instance types used (number of cores, available memory, etc.) and the bandwidth available in the network environment. In the meantime, we include the supplementary details in the appendix of this blog for curious readers.

Data Scientists and Data Engineers, Behold. Try it Yourself!

In the rapidly advancing world of AI, having a robust, fast, and scalable storage solution is not a luxury but a necessity. Qumulo, with its industry-leading performance and cost-effectiveness, stands out as the go-to cloud-based file solution for AI workloads. The benchmark not only underscores Qumulo’s prowess but also cements its position as the fastest and most widely applicable storage solution for AI.

See full results published on Spec.org

Appendix

Performance

Overall response time = 1.22 msec

Product and Test Information

| Qumulo – Public Cloud Reference | |
|---|---|
| Tested by | Qumulo, Inc. |
| Hardware Available | November 2023 |
| Software Available | November 2023 |
| Date Tested | November 2023 |
| License Number | 6738 |
| Licensee Locations | Seattle, WA USA |

Qumulo is a hybrid cloud file storage solution that boasts exabyte-plus scalability in a single namespace, identical features whether on-prem or in the cloud, and full multi-protocol support, ensuring flexibility and compatibility across diverse applications. By seamlessly integrating with public cloud infrastructure, Qumulo delivers unstructured data storage at any scale, with real-time visibility into storage performance and data usage.

Qumulo’s cloud-native file system empowers organisations to seamlessly migrate file-based applications and workloads to the public cloud environment. With Qumulo, businesses can efficiently manage exabytes of data, whether on-premises or in the cloud. The following findings clearly demonstrate that the Qumulo filesystem excels in delivering outstanding performance when deployed on AWS.

Solution Under Test Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |

|---|---|---|---|---|---|

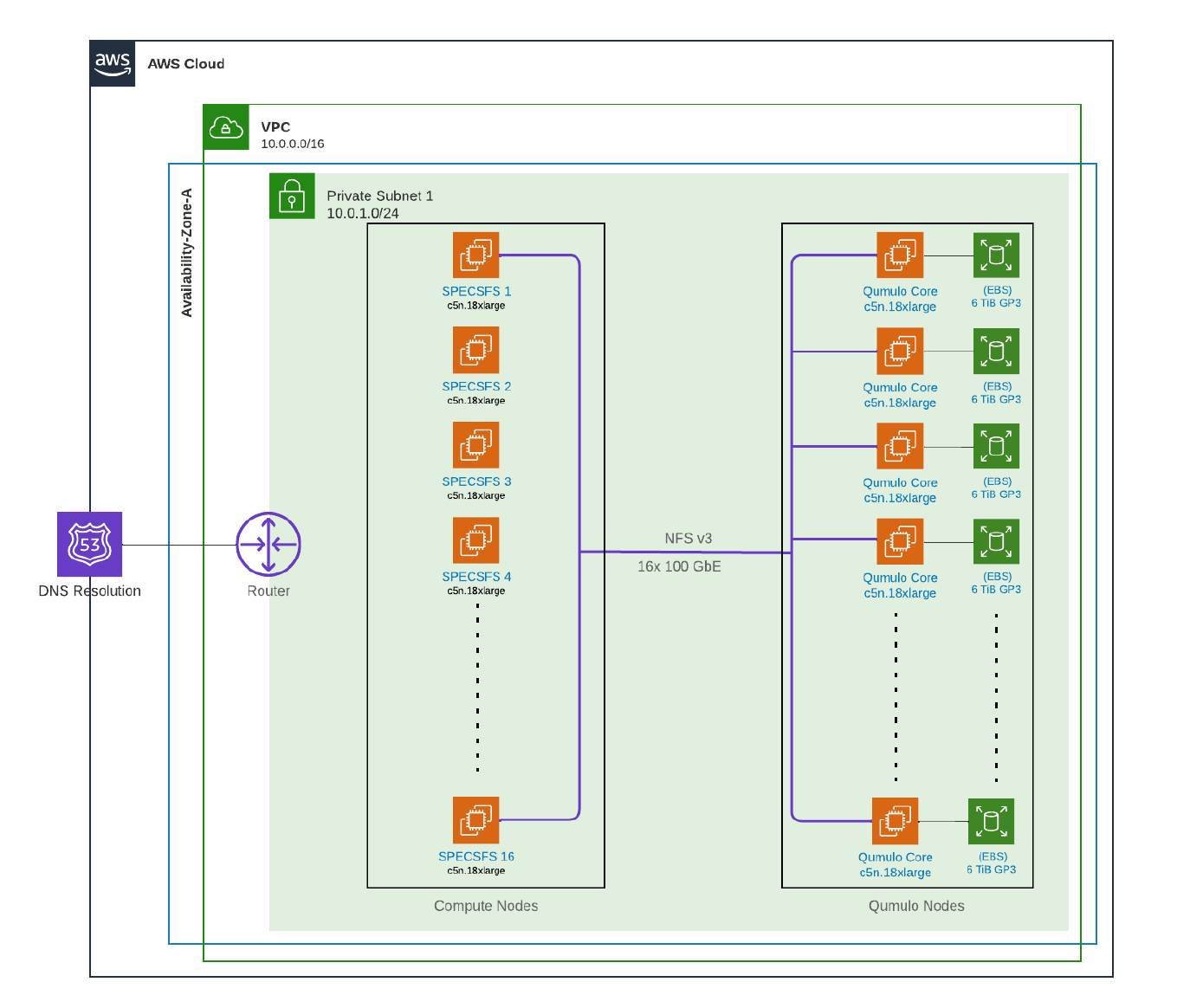

| 1 | 16 | AWS EC2 Instances | AWS | c5n.18xlarge | Qumulo nodes – Amazon c5n EC2 instances (c5n.18xlarge instances have 72 vCPU, 192GiB of memory, 100Gbps networking) |

| 2 | 16 | AWS EC2 Instances | AWS | c5n.18xlarge | Ubuntu Clients – Qumulo cluster – Amazon c5n EC2 instances (c5n.18xlarge instances have 72 vCPU, 192GiB of memory, 100Gbps networking) |

Configuration Diagrams

Qumulo in AWS

Component Software

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Qumulo Core | File System | 6.2.2 | Qumulo’s cloud-native file system allows organisations to effortlessly move file-based applications and workloads to the public cloud. |
| 2 | Ubuntu | Operating System | 22.04 | The Ubuntu operating system is deployed on the sixteen c5n.18xlarge compute nodes. They are used as clients running the SPEC Storage 2020 benchmarks. |

Hardware Configuration and Tuning – Physical

| Component Name | | |
|---|---|---|
| Parameter Name | Value | Description |
| SR-IOV | Enabled | Enables single-root I/O virtualization (SR-IOV) for the network interface |
| Port Speed | 100 GbE | Each node has 100 GbE connectivity |

Hardware Configuration and Tuning Notes

None

Software Configuration and Tuning – Virtual

| Networking | | |
|---|---|---|
| Parameter Name | Value | Description |
| Jumbo Frames | 9001 | Enables Ethernet jumbo frames up to 9001 bytes |
| Ubuntu Clients NFS Mount Parameters | | |
| Parameter Name | Value | Description |
| vers | 3 | Use NFSv3 |
| nconnect | 16 | Increase the number of NFS client connections up to 16 |
| tcp | | Use the TCP network transport protocol to communicate with the Qumulo cluster |
| local_lock | all | The client assumes that both flock and POSIX locks are local |
| EBS Volume Parameter | | |
| Parameter Name | Value | Description |
| IOPS | 16000 | Max IOPS for EBS volume |
| Throughput | 1000 | Max throughput for EBS volume, in MiB/s |
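For readers who want to reproduce the client setup, the NFS mount parameters above can be assembled into a standard Linux `mount` command. The Python sketch below only builds and prints the command; it does not run it. The server name `qumulo.example.com`, the export path `/`, and the mount point `/mnt/qumulo` are placeholders we made up for illustration, not values from the test environment.

```python
import shlex

# Mount options taken from the table above; None marks a flag with no value.
NFS_OPTIONS = {
    "vers": "3",
    "nconnect": "16",
    "tcp": None,
    "local_lock": "all",
}


def build_mount_command(server: str, export: str, mount_point: str,
                        options: dict = NFS_OPTIONS) -> list[str]:
    """Return the argv list for an NFS mount using the given options."""
    opts = ",".join(k if v is None else f"{k}={v}" for k, v in options.items())
    return ["mount", "-t", "nfs", "-o", opts, f"{server}:{export}", mount_point]


# Hypothetical server, export, and mount point; substitute your own.
cmd = build_mount_command("qumulo.example.com", "/", "/mnt/qumulo")
print(shlex.join(cmd))
```

Running the printed command typically requires root privileges, and `nconnect` needs a reasonably recent Linux kernel on the client.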

Software Configuration and Tuning Notes

None

Service SLA Notes

AWS uses commercially reasonable efforts to make the Included Products and Services each available with a Monthly Uptime Percentage of at least 99.99%, in each case during any monthly billing cycle. The Monthly Uptime Percentage is calculated by subtracting from 100% the percentage of minutes during the month in which any of the Included Products and Services, as applicable, was in the state of “Region Unavailable”.
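To put the 99.99% figure in perspective, the calculation described above can be sketched in a few lines of Python. The 30-day month and the function names are our assumptions for illustration; the actual SLA is evaluated per monthly billing cycle as defined by AWS.

```python
MINUTES_PER_DAY = 24 * 60


def monthly_uptime_percentage(unavailable_minutes: float, days_in_month: int = 30) -> float:
    """100% minus the percentage of minutes in the month that were unavailable."""
    total_minutes = days_in_month * MINUTES_PER_DAY
    return 100.0 - 100.0 * unavailable_minutes / total_minutes


def allowed_downtime_minutes(target_percentage: float, days_in_month: int = 30) -> float:
    """Minutes of unavailability that still meet the target uptime percentage."""
    total_minutes = days_in_month * MINUTES_PER_DAY
    return total_minutes * (100.0 - target_percentage) / 100.0


# A 30-day month has 43,200 minutes, so 99.99% leaves roughly 4.32 minutes.
print(round(allowed_downtime_minutes(99.99), 2))
print(round(monthly_uptime_percentage(unavailable_minutes=10), 4))
```

In other words, a 99.99% monthly target tolerates only a few minutes of "Region Unavailable" time in a 30-day month.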

Storage and Filesystems

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | Elastic Block Store (EBS) gp3 volumes, 1 TB capacity each. Each Qumulo node has 6 EBS volumes. | 2 Drive or 1 Node Protection with Erasure Coding | AWS EBS | 96 |

| Number of Filesystems | 1 |
|---|---|
| Total Capacity | 78.54 TB |
| Filesystem Type | Qumulo |

Filesystem Creation Notes

The Qumulo Core filesystem is deployed on AWS through either a CloudFormation template or Terraform. In both cases, the Qumulo Core AMI is deployed and the file system is configured as part of the automated process. No additional file system creation steps are required.

Storage and Filesystem Notes

None

Transport Configuration – Virtual

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 100Gbps Ethernet Virtual NIC | 16 | Used by client machines |
| 2 | 100Gbps Ethernet Virtual NIC | 16 | Used by Qumulo Core for inter-nodal communications as well as communications to any clients. |

Transport Configuration Notes

None

Switches – Virtual

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | AWS | 100Gbps Ethernet with Enhanced Networking | 16 | 16 | Used by client machines |
| 2 | AWS | 100Gbps Ethernet with Enhanced Networking | 16 | 16 | Used by Qumulo Core nodes |

Processing Elements – Virtual

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 1152 | vCPU | c5n.18xlarge Qumulo Core | 3.5 GHz Intel Xeon Platinum processors | Qumulo Core, Network communication, Storage Functions |
| 2 | 1152 | vCPU | c5n.18xlarge Ubuntu clients | 3.5 GHz Intel Xeon Platinum processors | SPECstorage client benchmark processors |

Processing Element Notes

None

Memory – Virtual

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| AWS EC2 c5n.18xlarge instance memory | 192 | 16 | V | 3072 |
| AWS EC2 c5n.18xlarge instance memory | 192 | 16 | V | 3072 |
| Grand Total Memory Gibibytes | | | | 6144 |

Memory Notes

None
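The vCPU, memory, and EBS volume totals in the tables above follow directly from the per-instance figures in the bill of materials. The short Python sketch below recomputes them as a sanity check. The `InstanceGroup` class and its field names are our own illustration, not part of any Qumulo or SPEC tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceGroup:
    role: str
    count: int
    vcpus_each: int
    memory_gib_each: int
    ebs_volumes_each: int = 0  # data volumes per instance, per the storage table

    @property
    def total_vcpus(self) -> int:
        return self.count * self.vcpus_each

    @property
    def total_memory_gib(self) -> int:
        return self.count * self.memory_gib_each

    @property
    def total_ebs_volumes(self) -> int:
        return self.count * self.ebs_volumes_each


# Per-instance figures for c5n.18xlarge as listed in the bill of materials.
GROUPS = [
    InstanceGroup("Qumulo nodes", count=16, vcpus_each=72, memory_gib_each=192, ebs_volumes_each=6),
    InstanceGroup("Ubuntu clients", count=16, vcpus_each=72, memory_gib_each=192),
]

for g in GROUPS:
    print(f"{g.role}: {g.total_vcpus} vCPU, {g.total_memory_gib} GiB, {g.total_ebs_volumes} EBS volumes")

grand_total_memory_gib = sum(g.total_memory_gib for g in GROUPS)
print("Grand total memory (GiB):", grand_total_memory_gib)
```

The results line up with the published tables: 1152 vCPUs and 3072 GiB per group, 96 EBS volumes on the Qumulo nodes, and 6144 GiB of memory overall.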

Stable Storage

Qumulo Core utilises Amazon Elastic Block Store (EBS) volumes, which provide stable storage.

Solution Under Test Configuration Notes

The solution under test was a standard distributed cluster built utilising Qumulo Core. Qumulo Core clusters can handle large and small file I/O along with metadata-intensive applications. No specialised tuning is required for different or mixed-use workloads.