This blog explains the trade-offs cloud architects have had to design around when building AI infrastructure on traditional file systems. It also explains how Azure Native Qumulo resolves those trade-offs, reducing idle GPU time and significantly lowering costs without sacrificing performance.

When it comes to executing AI operations at scale, file storage services have failed to balance performance against cost efficiency. Deploying AI workflows directly on file storage infrastructure has been impractical, cumbersome, and economically unsustainable.

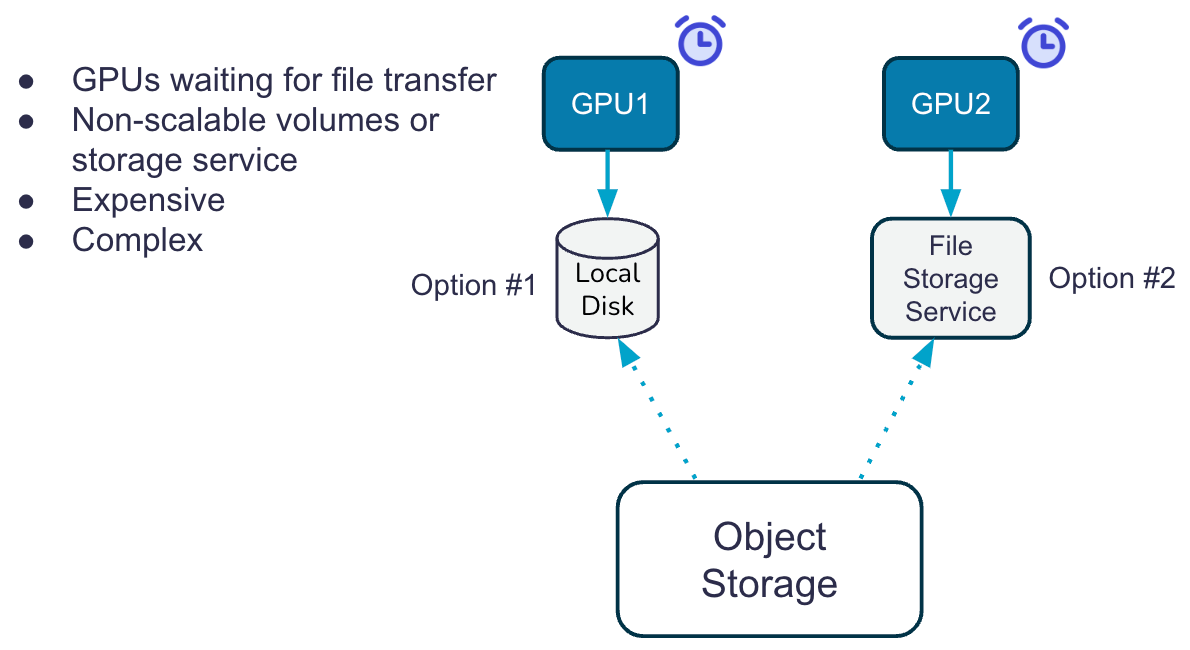

Today, organizations are compelled to construct AI-driven solutions by transferring data from low-cost object storage to high-cost file caches (either local disk or central filesystems), where the AI computational tasks are executed on coveted GPUs. Whether it’s data collection, pre-training, production training, or continuous inference, data movement between storage tiers not only adds complexity but also incurs additional API transaction fees.

A two-tier system using file caches also means that GPUs are kept waiting up to 40% of the time just to load data from object storage into the file cache. That’s a lot of time for expensive GPUs to sit idle. Worse, with smaller caches, the training dataset is limited to the size of the local cache, so larger datasets such as images and video require multiple load phases.
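To put the idle-time figure in perspective, the arithmetic can be sketched as follows. Only the 40% idle fraction comes from the text above; the hourly GPU rate and the number of billed hours are hypothetical placeholders chosen for illustration.

```python
def effective_hourly_cost(hourly_rate: float, idle_fraction: float) -> float:
    """Cost of one hour of useful GPU work when part of each billed hour is idle."""
    if not 0.0 <= idle_fraction < 1.0:
        raise ValueError("idle_fraction must be in [0, 1)")
    return hourly_rate / (1.0 - idle_fraction)


def wasted_spend(hourly_rate: float, billed_hours: float, idle_fraction: float) -> float:
    """Portion of the GPU bill spent waiting on data loads."""
    return hourly_rate * billed_hours * idle_fraction


# Hypothetical inputs (not from the post): a $3.00/hour GPU billed for 100 hours.
GPU_RATE = 3.00
BILLED_HOURS = 100
IDLE_FRACTION = 0.40  # the "up to 40%" worst case quoted in the text

if __name__ == "__main__":
    per_useful_hour = effective_hourly_cost(GPU_RATE, IDLE_FRACTION)
    wasted = wasted_spend(GPU_RATE, BILLED_HOURS, IDLE_FRACTION)
    print(f"Effective cost: ${per_useful_hour:.2f} per useful GPU hour")
    print(f"Spend lost to idle time: ${wasted:.2f}")
```

The point of the sketch is that idle time inflates the price of every useful GPU hour, so removing the load phase lowers cost even if the GPU rate itself never changes.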

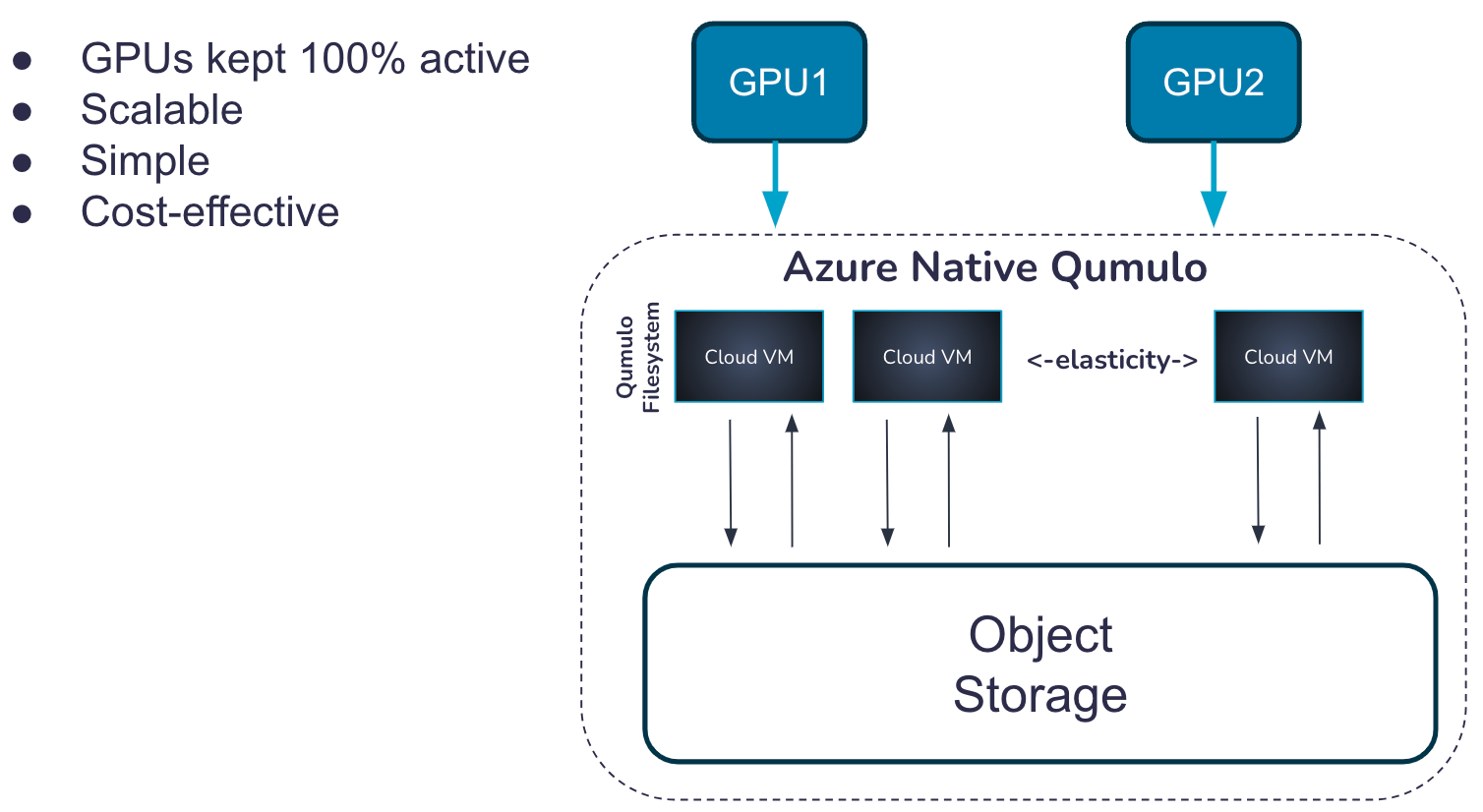

Azure Native Qumulo (ANQ) acts as an intelligent data accelerator for the object store, executing parallelized, prefetched reads served directly from the Azure primitive infrastructure via the Qumulo filesystem to GPUs running AI training models. ANQ accelerates GPU-side performance, eliminating load times between the object layer and the filesystem. This changes how file-dependent AI training in the cloud should be architected, as depicted in the image below.

As a proof point, we refer to our latest SPECstorage AI_IMAGE results, which demonstrate ANQ’s architecture as the industry’s fastest and most cost-effective cloud-native storage solution.

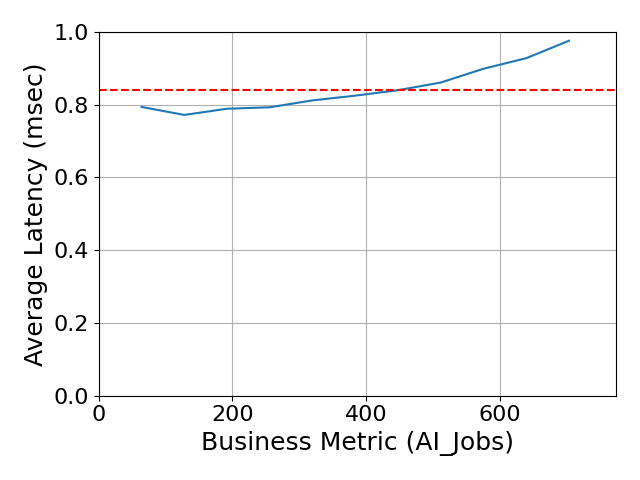

We achieved the top result with an Overall Response Time (ORT) of 0.84ms and a total cost to the customer of $400 at list price over a 5-hour burst period. This is disruptive because our burst cycle was entirely SaaS PAYGO, where metering stopped when the performance wasn’t needed. Most other vendors, including a previous submission at 700 jobs at 0.85ms ORT, do not communicate costs transparently because:

- They include a large, non-elastic deployment of over-sized VMs that you would have to keep running, even after deployment, in order to maintain your data set.

- They require a 1-3 year software subscription, costing hundreds of thousands of dollars for a software entitlement, rather than offering a PAYGO consumption model.

These claims *sound* hard to believe, so you might be asking:

- What is so different about ANQ’s architecture that delivers such amazing results?

- How can Qumulo achieve the speed of a Ferrari, with the publicly advertised price of a reliable Toyota Corolla?

- Does this mean I can finally use file storage in the cloud without managing tiering to object?

- What if my performance need fluctuates wildly based on the day of the week or week of the month?

Three simple things allow Qumulo to answer all of these questions and stand confidently behind our claim as the first modern cloud file storage service.

True Elastic Scalability allows customers to focus on other business and technology concerns rather than cloud-native storage infrastructure. The storage performance is ready to scale when the AI application stack demands it, saving cost when there is no demand.

Note: Other cloud file systems fail on this critical capability by operating pre-provisioned “volumes” of fixed capacity. Not really any different from on-prem storage, but far more expensive!

Disruptive Pricing: Qumulo has innovated its way into disruptive pricing, taking advantage of cloud economics; we are passing the savings on to the customer. The disruptive part? You only pay for what you use.

The pricing is simple and is based on two factors: storage usage (TB) and performance needed (throughput and IOPS). ANQ scales performance and capacity dynamically, so there is no need to pre-provision resources in anticipation of demand.
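A rough way to see why usage-based metering matters for bursty workloads is to compare it with provisioning for peak. The sketch below is illustrative only: the `Rates` values are hypothetical placeholders rather than Qumulo list prices, and performance is reduced to a single throughput number for simplicity, whereas the text above also mentions IOPS.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

# One sample per hour: (capacity used in TB, throughput used in GB/s).
HourlyUsage = Tuple[float, float]


@dataclass(frozen=True)
class Rates:
    """Hypothetical unit prices; placeholders, not a real price sheet."""
    per_tb_hour: float
    per_gbps_hour: float


def paygo_cost(usage: Sequence[HourlyUsage], rates: Rates) -> float:
    """Bill each hour for what was actually consumed in that hour."""
    return sum(
        tb * rates.per_tb_hour + gbps * rates.per_gbps_hour
        for tb, gbps in usage
    )


def peak_provisioned_cost(usage: Sequence[HourlyUsage], rates: Rates) -> float:
    """Bill every hour as if the peak capacity and throughput were always reserved."""
    if not usage:
        return 0.0
    peak_tb = max(tb for tb, _ in usage)
    peak_gbps = max(gbps for _, gbps in usage)
    hourly = peak_tb * rates.per_tb_hour + peak_gbps * rates.per_gbps_hour
    return len(usage) * hourly


if __name__ == "__main__":
    rates = Rates(per_tb_hour=1.0, per_gbps_hour=2.0)  # hypothetical
    # A day with a 5-hour burst: mostly quiet, briefly demanding.
    day = [(10.0, 1.0)] * 19 + [(10.0, 20.0)] * 5
    print("PAYGO:", paygo_cost(day, rates))
    print("Provisioned for peak:", peak_provisioned_cost(day, rates))
```

The gap between the two totals grows with how spiky the workload is; for a perfectly flat workload the two models cost the same under these assumptions.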

Performance increases linearly as your workload increases. The Azure Native Qumulo filesystem is built on top of the object tier, and the average cache-hit ratio across all clusters (on-premises and in-cloud) is north of 95%! The architecture acts as an accelerator that executes parallelized reads, which are prefetched from object storage and served directly from the filesystem to its clients, which may be GPUs running AI applications. This managed ‘accelerator’ ensures GPU-side scalability and performance without having to wait for load times between the object layer and the filesystem.

- Read cache is serviced from an in-memory L1 cache and a generous NVMe L2 cache. The global read-cache is increased on-demand, elastically. This is why we had a sub-millisecond overall response time for the SPECstorage AI_IMAGE benchmark; the system scaled the cache temporarily to meet the performance requirement!

  Behind the read cache is Qumulo’s highly tuned machine learning model that guesses which blocks are most likely to be read next. Trained with years of access patterns from over 1 trillion requests, the model accurately pre-fetches and serves data from the NVMe or L1 cache.

- Write transactions leverage high performance Azure Managed disks, which act as a protected write-back cache for incoming writes, continuously flushing them to Azure Blob Storage. Every transaction is journaled, ensuring no single point of data loss in the ANQ architecture. This approach is critical during compute node failures, and is more durable than some of our competitors’ architecture where in-flight writes can be lost during compute events.
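The read path described above can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not Qumulo's implementation: the class names are hypothetical, both tiers are plain in-process LRU maps, prefetching is synchronous, and a simple sequential read-ahead stands in for the machine learning predictor mentioned in the text.

```python
from collections import OrderedDict
from typing import Callable, Optional, Tuple


class LRU:
    """Fixed-size least-recently-used map from block id to bytes."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._items: "OrderedDict[int, bytes]" = OrderedDict()

    def get(self, key: int) -> Optional[bytes]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: int, value: bytes) -> Optional[Tuple[int, bytes]]:
        """Insert a block and return the evicted (key, value), if any."""
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            return self._items.popitem(last=False)
        return None


class TieredReadCache:
    """Toy L1 (memory) + L2 (NVMe stand-in) cache in front of an object store."""

    def __init__(self, fetch: Callable[[int], bytes],
                 l1_blocks: int, l2_blocks: int, readahead: int = 2) -> None:
        self.fetch = fetch          # hypothetical object-store read function
        self.l1 = LRU(l1_blocks)
        self.l2 = LRU(l2_blocks)
        self.readahead = readahead  # sequential stand-in for the ML predictor
        self.hits = 0
        self.misses = 0

    def _load(self, block_id: int) -> Tuple[bytes, bool]:
        """Return (data, was_cached), promoting the block into L1."""
        data = self.l1.get(block_id)
        if data is not None:
            return data, True
        data = self.l2.get(block_id)
        cached = data is not None
        if data is None:
            data = self.fetch(block_id)  # slow path: go to the object store
        evicted = self.l1.put(block_id, data)
        if evicted is not None:
            self.l2.put(*evicted)        # demote the L1 victim to L2
        return data, cached

    def read(self, block_id: int) -> bytes:
        data, cached = self._load(block_id)
        if cached:
            self.hits += 1
        else:
            self.misses += 1
        # Warm the cache with the blocks expected next (not counted as reads).
        for nxt in range(block_id + 1, block_id + 1 + self.readahead):
            self._load(nxt)
        return data
```

With a sequential scan, only the first read goes to the object store on the client's critical path; later reads are served from cache because the read-ahead has already pulled them in. A production system would prefetch asynchronously and would not assume sequential access.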

Inconceivable? We invite you to test for yourself. You can stand up a free 7-day trial of Azure Native Qumulo here. The default config will allow you to see the functionality, but has a safety rate-limiter. If you need more performance, just reach out to hpc-trial-request@qumulo.com.

Interested in learning more? Download our solution brief below.